What is an Interrupt?

An interrupt is a signal (an "interrupt request") generated by some event external to the CPU, which causes the CPU to stop what it is doing (stop executing the code it is currently running) and jump to a separate piece of code, written by the programmer, that deals with the event which generated the interrupt request.

Interrupts are an essential feature of a microcontroller. They enable the software to respond, in a timely fashion, to internal and external hardware events. For example, receiving and transmitting bytes via the SCI (serial communications interface) is more efficient, in terms of processor time, with interrupts than with a polling method.

Performance is improved because tasks can be given to hardware modules which “report back” when they are finished.

Using interrupts requires that we understand how a CPU processes an interrupt so that we can configure our software to take advantage of them.

An interrupt is an event triggered inside the microcontroller, by either internal or external hardware, that initiates the automatic transfer of software execution to an interrupt service routine (ISR). On completion of the ISR, software execution returns to the next instruction that would have executed had the interrupt not occurred.

Some interrupts share the same interrupt vector. For example, the reception and transmission of a byte via the SCI lead to just one interrupt, and there is one vector associated with it: the two interrupt sources are ORed together to create one interrupt request. In such cases, the ISR is responsible for polling the status flags to see which event actually triggered the interrupt. Care must be taken to ensure that every pending event is serviced by the software.
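
A minimal sketch of such a shared-vector ISR, run against simulated registers rather than real hardware. The flag names RDRF (receive data register full) and TDRE (transmit data register empty) follow HCS12-style SCI naming, but the bit masks, the `sci_status`/`sci_rx_data` variables and the handler names are assumptions for illustration. On real parts, these flags are usually cleared by a register-access sequence described in the reference manual, not by writing the bit directly as done here.

```c
#include <stdint.h>

/* Assumed bit masks for the simulated SCI status register. */
#define SCI_RDRF 0x20u  /* receive data register full */
#define SCI_TDRE 0x80u  /* transmit data register empty */

/* Simulated hardware registers (hypothetical, not real addresses). */
static volatile uint8_t sci_status;
static volatile uint8_t sci_rx_data;

static int     rx_count, tx_count;
static uint8_t last_rx;

static void handle_rx(void) {
    last_rx = sci_rx_data;                  /* take the received byte */
    rx_count++;
    sci_status &= (uint8_t)~SCI_RDRF;       /* simulated flag clear */
}

static void handle_tx(void) {
    tx_count++;                             /* a real driver would write the next byte */
    sci_status &= (uint8_t)~SCI_TDRE;       /* simulated flag clear */
}

/* One ISR for both sources: test every flag instead of stopping at the
   first match, so simultaneous RX and TX events are both serviced. */
void sci_isr(void) {
    uint8_t status = sci_status;            /* read the status once */
    if (status & SCI_RDRF) handle_rx();
    if (status & SCI_TDRE) handle_tx();
}
```

If the ISR returned after the first flag it found, the other source would stay pending until the interrupt was taken again.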

Process of Interrupt Handling:-

When an interrupt occurs:

· Disable the other interrupts
· Save the context
· Control goes to the corresponding interrupt handler
· The Interrupt Service Routine (ISR) is executed
· After executing, restore the context
· Enable the interrupts
· Return to the task
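
The steps above can be modeled on a host machine with a toy CPU state. This is a simulation only: `cpu_state`, `handle_interrupt` and `demo_isr` are hypothetical, and on real hardware several of these steps (disabling interrupts, saving the PC, loading the vector) are performed automatically by the processor rather than by C code.

```c
#include <stdbool.h>
#include <stdint.h>

/* Toy CPU state for the simulation; not a real register file. */
typedef struct {
    uint32_t pc;           /* program counter */
    uint32_t regs[4];      /* general-purpose registers */
    bool     irq_enabled;  /* global interrupt-enable flag */
} cpu_state;

typedef void (*isr_fn)(cpu_state *cpu);

/* Runs one interrupt through the steps listed above. */
void handle_interrupt(cpu_state *cpu, isr_fn isr, uint32_t isr_address) {
    cpu->irq_enabled = false;   /* disable the other interrupts */
    cpu_state saved = *cpu;     /* save the context */
    cpu->pc = isr_address;      /* control goes to the handler */
    isr(cpu);                   /* the ISR executes and may use any register */
    *cpu = saved;               /* restore the context */
    cpu->irq_enabled = true;    /* enable the interrupts */
    /* return to task: execution continues at the restored cpu->pc */
}

/* Example ISR that records what it saw and clobbers a register. */
static int      demo_runs;
static uint32_t demo_seen_pc;
static bool     demo_seen_irq_enabled = true;

static void demo_isr(cpu_state *cpu) {
    demo_runs++;
    demo_seen_pc = cpu->pc;
    demo_seen_irq_enabled = cpu->irq_enabled;
    cpu->regs[0] = 0xDEADu;
}
```

After `handle_interrupt` returns, the interrupted task sees its original PC and registers, even though the ISR overwrote `regs[0]`.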

Many embedded systems are called interrupt-driven systems because most of the processing occurs in ISRs, and the embedded system spends most of its time in a low-power mode.

Sometimes an ISR may be split into two parts: a top half (fast interrupt handler, First-Level Interrupt Handler (FLIH)) and a bottom half (slow interrupt handler, Second-Level Interrupt Handler (SLIH)). The top half is the fast part of the ISR; it should quickly store minimal information about the interrupt and schedule the slower bottom half to run at a later time.
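
A minimal sketch of the top-half/bottom-half split for a receive interrupt, assuming a single-core microcontroller on which 8-bit loads and stores are atomic. The names `uart_top_half`, `uart_bottom_half` and the queue size are hypothetical. The top half only copies the byte into a ring buffer and sets a flag; the bottom half does the slow processing later (a running sum stands in for that work).

```c
#include <stdbool.h>
#include <stdint.h>

#define QUEUE_SIZE 8u   /* assumed buffer size */

static volatile uint8_t queue[QUEUE_SIZE];
static volatile uint8_t head, tail;     /* head: top half writes; tail: bottom half */
static volatile bool    work_pending;   /* "bottom half scheduled" flag */
static uint32_t         checksum;       /* stands in for the slow processing */

/* Top half (FLIH): runs in interrupt context, stores the minimum, returns. */
void uart_top_half(uint8_t byte) {
    uint8_t next = (uint8_t)((head + 1u) % QUEUE_SIZE);
    if (next != tail) {                 /* drop the byte if the queue is full */
        queue[head] = byte;
        head = next;
    }
    work_pending = true;                /* schedule the bottom half */
}

/* Bottom half (SLIH): called later, e.g. from the main loop or a task. */
void uart_bottom_half(void) {
    if (!work_pending) return;
    work_pending = false;
    while (tail != head) {
        checksum += queue[tail];        /* the slow part of the work */
        tail = (uint8_t)((tail + 1u) % QUEUE_SIZE);
    }
}
```

On a multi-core system, or where the compiler may reorder these accesses, C11 atomics or the platform's memory barriers would be needed instead of plain `volatile`.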

Mainly, there are two types of interrupts: hardware and software. Software interrupts are invoked from software, using a specific instruction. Hardware interrupts are triggered by hardware, such as peripheral devices.

For instance, your embedded system may contain a timer that sends a pulse to the controller every second. Rather than having the microcontroller wait for this pulse, the pulse can trigger an interrupt whose ISR handles the signal when it arrives.

An interrupt vector is an important part of the interrupt service mechanism: it associates an interrupt source with the ISR that the processor executes.

On an interrupt, the processor first saves the program counter and/or other CPU registers and then loads the vector address into the program counter.

The vector address provides the processor with either the ISR itself or the ISR address for the interrupt source, group of sources, or given interrupt type.

The system software designer puts the bytes at an ISR_VECTADDR address.

The bytes are either:

· the ISR short code, or a jump instruction to the first instruction of the ISR, or
· the ISR short code with a call to the full code of the ISR at an ISR address, or
· bytes that point to an ISR address.
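
As an illustration of the third option (bytes pointing to an ISR address), a vector table can be modeled in C as an array of function pointers indexed by interrupt number. The interrupt numbers, handler names and the catch-all `default_isr` are assumptions; on a real part the table layout is fixed by the processor, and the table is placed at the vector address, typically through the linker script.

```c
#include <stddef.h>

typedef void (*isr_t)(void);

static int last_serviced = -1;   /* records which handler ran (for the demo) */

static void default_isr(void) { last_serviced = 0; }   /* catch-all handler */
static void timer_isr(void)   { last_serviced = 1; }
static void uart_isr(void)    { last_serviced = 2; }

/* Entry n holds the address of the ISR for (hypothetical) interrupt n. */
static isr_t const vector_table[] = {
    default_isr,   /* 0 */
    timer_isr,     /* 1 */
    uart_isr,      /* 2 */
};

#define NUM_VECTORS (sizeof vector_table / sizeof vector_table[0])

/* Mimics what the hardware does after saving the PC: look up and jump. */
void dispatch(unsigned irq) {
    isr_t handler = (irq < NUM_VECTORS) ? vector_table[irq] : default_isr;
    handler();
}
```

Routing out-of-range numbers to a default handler is a common defensive choice, so a spurious interrupt cannot jump to an arbitrary address.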

Interrupt Latency:-

When an interrupt occurs, servicing it by executing the ISR may not start immediately, since the context switch and related processing take time. The interval between the occurrence of an interrupt and the start of execution of the ISR is called interrupt latency.

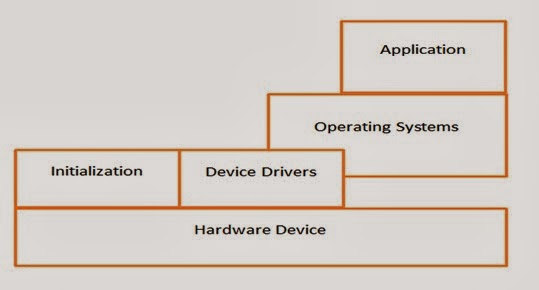

Depth of Interrupt Handling Mechanism:-

The three main types of interrupts are software, internal hardware, and external hardware. Software interrupts are explicitly triggered internally by some instruction within the current instruction stream being executed by the master processor.

Internal hardware interrupts, on the other hand, are initiated by an event that results from a problem with the current instruction stream being executed by the master processor, due to features of the hardware, such as illegal math operations (overflow, divide-by-zero), debugging (single-stepping, breakpoints), and invalid instructions (opcodes).

Interrupts that are raised (requested) by some internal event to the master processor (basically software and internal hardware interrupts) are also commonly referred to as exceptions or traps.

Exceptions are internally generated hardware interrupts triggered by errors that are detected by the master processor during software execution, such as invalid data or a divide by zero. How exceptions are prioritized and processed is determined by the architecture.

Traps are software interrupts specifically generated by the software, via an exception instruction. Finally, external hardware interrupts are initiated by hardware other than the master CPU (board buses, I/O, etc.).
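
Standard C offers a host-side analogy for a software interrupt: `raise()` synchronously delivers a signal to the running program, and the C standard specifies that `raise()` does not return until the signal handler has returned, much as a trap instruction transfers control to its handler and then resumes the instruction stream. This is only an analogy, chosen because it runs on any hosted C implementation; on a microcontroller a trap is a dedicated instruction whose name and behavior are architecture-specific. `software_interrupt` and `trap_handler` are hypothetical names.

```c
#include <signal.h>

/* A signal handler may only assign to volatile sig_atomic_t objects. */
static volatile sig_atomic_t trap_taken;
static volatile sig_atomic_t trap_signal;

/* Stands in for the trap's handler. */
static void trap_handler(int sig) {
    trap_signal = sig;
    trap_taken = 1;
}

/* Host analogy of executing a trap instruction: control passes to the
   handler and comes back to the next statement. Returns 0 on success. */
int software_interrupt(void) {
    if (signal(SIGINT, trap_handler) == SIG_ERR)
        return -1;                 /* could not install the handler */
    trap_taken = 0;
    if (raise(SIGINT) != 0)
        return -1;
    return 0;                      /* the handler has already run here */
}
```

The handler is reinstalled on every call because, on some systems, `signal()` resets the disposition to the default after one delivery.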

For interrupts that are raised by external events, the master processor is either wired, via an input pin called an IRQ (interrupt request level) pin or port, to outside intermediary hardware (e.g., interrupt controllers), or wired directly to other components on the board with dedicated interrupt ports that signal the master CPU when they want to raise an interrupt.

These types of interrupts are triggered in one of two ways: level-triggered or edge-triggered. A level-triggered interrupt is initiated when the IRQ signal is at a certain level (i.e., HIGH or LOW). These interrupts are processed when the CPU finds a request for a level-triggered interrupt while sampling its IRQ line, such as at the end of processing each instruction.

Edge-triggered interrupts are triggered when a change occurs on the IRQ line (from LOW to HIGH, the rising edge of the signal, or from HIGH to LOW, the falling edge). Once triggered, these interrupts latch into the CPU until processed.

Both types of interrupts have their strengths and drawbacks. With a level-triggered interrupt, if the request is being processed and has not been disabled before the next sampling period, the CPU will try to service the same interrupt again. On the flip side, if a level-triggered interrupt were triggered and then disabled before the CPU’s sample period, the CPU would never note its existence and would therefore never process it.

Edge-triggered interrupts can have problems if they share the same IRQ line: if they are triggered in the same manner at about the same time (say, before the CPU can process the first interrupt), the CPU is able to detect only one of the interrupts.
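
These drawbacks can be reproduced with a small host-side simulation. It assumes the IRQ line is recorded as an array of 0/1 values at fine time resolution, that the CPU looks at the line only every `period` steps (`period` must be nonzero), and that edge-detection hardware latches any rising edge in between. The function names are hypothetical and do not model a specific CPU.

```c
#include <stddef.h>

/* Level-triggered model: the request is serviced whenever the CPU samples
   the line HIGH (every `period` steps). Nothing is latched in between. */
int level_services(const int *line, size_t n, size_t period) {
    int count = 0;
    for (size_t t = 0; t < n; t += period)
        if (line[t])
            count++;
    return count;
}

/* Edge-triggered model: hardware latches every LOW-to-HIGH transition at
   full resolution; the CPU services and clears the latch when it samples. */
int edge_services(const int *line, size_t n, size_t period) {
    int count = 0;
    int latch = 0;
    for (size_t t = 0; t < n; t++) {
        if (t > 0 && !line[t - 1] && line[t])
            latch = 1;                 /* rising edge detected */
        if (t % period == 0 && latch) {
            count++;                   /* CPU takes the interrupt */
            latch = 0;                 /* acknowledging clears the latch */
        }
    }
    return count;
}
```

With a short pulse between samples, `level_services` sees nothing while `edge_services` catches it. With a line held HIGH and never cleared, the level model services the same request at every sample. If two devices on a shared line rise at the same moment, the OR-ed line shows only one edge, so only one request is serviced.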

Because of these drawbacks, level-triggered interrupts are generally recommended for interrupts that share IRQ lines, whereas edge-triggered interrupts are typically recommended for interrupt signals that are very short or very long.

When the IRQ of a master processor receives a signal that an interrupt has been raised, the interrupt is processed by the interrupt-handling mechanisms within the system. These mechanisms are made up of a combination of hardware and software components.

In terms of hardware, an interrupt controller can be integrated onto a board, or within a processor, to mediate interrupt transactions in conjunction with software.

Interrupt acknowledgment (IACK) is typically handled by the master processor when an external device triggers an interrupt. Because IACK cycles are a function of the local bus, the IACK function of the master CPU depends on the interrupt policies of the system buses, as well as the interrupt policies of the components within the system that trigger the interrupts. With respect to the external device triggering an interrupt, the interrupt scheme depends on whether that device can provide an interrupt vector (a place in memory that holds the address of an interrupt’s ISR, the software that the master CPU executes after an interrupt is triggered).

For devices that cannot provide an interrupt vector, referred to as non-vectored interrupts, master processors implement an auto-vectored interrupt scheme in which one ISR is shared by the non-vectored interrupts; determining which specific interrupt to handle, interrupt acknowledgment, etc., are all handled by the ISR software.
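
A minimal sketch of an auto-vectored (shared) ISR for non-vectored devices. The `device` structure, its `requesting` flag and the three-entry device list are hypothetical stand-ins for each device's status register. The point is that identification and acknowledgment are done in software, and the polling order acts as a software priority.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for a non-vectored device's status register. */
typedef struct {
    bool requesting;   /* device's "interrupt pending" flag */
    int  serviced;     /* times the shared ISR has handled this device */
} device;

/* Polled in this order, so earlier entries are effectively higher priority. */
static device devices[3];   /* e.g. [0] timer, [1] UART, [2] GPIO */
#define NUM_DEVICES (sizeof devices / sizeof devices[0])

/* The single ISR shared by all non-vectored sources. */
void autovector_isr(void) {
    for (size_t i = 0; i < NUM_DEVICES; i++) {
        if (devices[i].requesting) {         /* identify the source */
            devices[i].serviced++;           /* device-specific handling */
            devices[i].requesting = false;   /* acknowledge in software */
        }
    }
}
```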

An interrupt-vectored scheme is implemented to support peripherals that can provide an interrupt vector over a bus and where acknowledgment is automatic. An IACK-related register on the master CPU informs the device requesting the interrupt to stop requesting interrupt service, and provides what the master processor needs to process the correct interrupt (such as the interrupt number and vector number).

Based upon the activation of an external interrupt pin, an interrupt controller’s interrupt select register, a device’s interrupt select register, or some combination of the above, the master processor can determine which ISR to execute. After the ISR completes, the master processor resets the interrupt status by adjusting the bits in the processor’s status register or an interrupt mask in the external interrupt controller. The interrupt request and acknowledgment mechanisms are determined by the device requesting the interrupt (since it determines which interrupt service to trigger), the master processor, and the system bus protocols.

Because there are potentially multiple components on an embedded board that may need to request interrupts, the scheme that manages all of the different types of interrupts is priority-based. This means that all available interrupts within a processor have an associated interrupt level, which is the priority of that interrupt within the system.

Typically, level “1” is the highest priority within the system, and priorities decrease incrementally from there (2, 3, 4, etc.). Higher-priority interrupts have precedence over any instruction stream being executed by the master processor, meaning that not only do interrupts have precedence over the main program, but higher-priority interrupts also take precedence over lower-priority ones.

When an interrupt is triggered, lower-priority interrupts are typically masked, meaning they are not allowed to trigger while the system is handling a higher-priority interrupt. The interrupt with the highest priority is usually the NMI (non-maskable interrupt), which cannot be masked in this way.
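
The priority and masking rules above can be sketched as a software priority encoder: among the pending requests, pick the numerically lowest level, but only if it outranks the code currently running; an NMI bypasses the mask. The encoding (bit n-1 of `pending` for level n, `IDLE_LEVEL` for the main program, 0 while an NMI handler runs) and the function name are assumptions for illustration; real interrupt controllers make this decision in hardware.

```c
#include <stdbool.h>
#include <stdint.h>

#define IRQ_NONE   (-1)
#define IRQ_NMI    0
#define IDLE_LEVEL 33u   /* main program: lower priority than levels 1..32 */

/* pending: bit (n - 1) set means level n is requesting (1 = highest).
   current: level of the code running now (IDLE_LEVEL for the main program,
            0 while the NMI handler runs).
   Returns the level to service next, IRQ_NMI, or IRQ_NONE if all masked. */
int select_irq(bool nmi_pending, uint32_t pending, unsigned current) {
    if (nmi_pending && current > 0)
        return IRQ_NMI;                     /* not maskable by the level */
    for (unsigned level = 1; level <= 32 && level < current; level++) {
        if (pending & ((uint32_t)1 << (level - 1)))
            return (int)level;              /* highest-priority unmasked request */
    }
    return IRQ_NONE;                        /* everything pending is masked */
}
```

While a level 2 ISR runs, pending level 3 and 5 requests are masked; while a level 4 ISR runs, the level 3 request may preempt it.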

How the components are prioritized depends on the IRQ line they are connected to, in the case of external devices, or on what has been assigned by the processor design. It is the master processor’s internal design that determines the number of external interrupts available and the interrupt levels supported within an embedded system.

Several different priority schemes are implemented in the various architectures. These schemes commonly fall under one of three models: the equal single level, where the latest interrupt to be triggered gets the CPU; the static multilevel, where priorities are assigned by a priority encoder and the interrupt with the highest priority gets the CPU; and the dynamic multilevel, where a priority encoder assigns priorities and the priorities are reassigned when a new interrupt is triggered.
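
Two of the three models can be contrasted in a few lines of C. The `request` record and both selector functions are hypothetical; the dynamic multilevel model is not sketched because the rule for reassigning priorities varies by architecture and is not specified here.

```c
#include <stddef.h>

/* Hypothetical record of one pending interrupt request. */
typedef struct {
    int           id;      /* which source raised it */
    unsigned      level;   /* 1 = highest priority */
    unsigned long t;       /* time at which it was triggered */
} request;

/* Equal single level: all requests are equal; the latest one gets the CPU. */
int pick_equal_single_level(const request *r, size_t n) {
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (r[i].t > r[best].t) best = i;
    return n ? r[best].id : -1;          /* -1: nothing pending */
}

/* Static multilevel: fixed priorities (as from a priority encoder);
   the highest-priority request gets the CPU. */
int pick_static_multilevel(const request *r, size_t n) {
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (r[i].level < r[best].level) best = i;
    return n ? r[best].id : -1;
}
```

Given the same pending set, the two models can choose different sources: the most recent request in one case, the most important in the other.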

After the hardware mechanisms have determined which interrupt to handle and have acknowledged the interrupt, the current instruction stream is halted and a context switch is performed, a process in which the master processor switches from executing the current instruction stream to another set of instructions.

This alternate set of instructions, executed as the result of an interrupt, is the ISR or interrupt handler. An ISR is simply a fast, short program that is executed when an interrupt is triggered.

The specific ISR executed for a particular interrupt depends on whether a non-vectored or vectored scheme is in place. In the case of a non-vectored interrupt, a memory location contains the start of an ISR that the PC (program counter) or some similar mechanism branches to for all non-vectored interrupts. The ISR code then determines the source of the interrupt and provides the appropriate processing. In a vectored scheme, an interrupt vector table typically contains the address of the ISR.

The steps involved in an interrupt context switch include stopping the current program’s execution of instructions, saving the context information (registers, the PC, or a similar mechanism that indicates where the processor should jump back to after executing the ISR) onto a stack, either dedicated or shared with other system software, and possibly disabling other interrupts. After the master processor finishes executing the ISR, it context switches back to the original instruction stream that had been interrupted, using the context information as a guide.

The performance of an embedded design is affected by the latencies (delays) involved in the interrupt-handling scheme. The interrupt latency is essentially the time from when an interrupt is triggered until its ISR starts executing. Under normal circumstances, much of this time is overhead on the master CPU: processing the interrupt request and acknowledging the interrupt, obtaining an interrupt vector (in a vectored scheme), and context switching to the ISR.
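
The latency components listed above add up, and a cycle count converts to time through the clock frequency. The three-stage breakdown, the `latency_budget` struct and the cycle figures used below are hypothetical; real numbers come from the processor's documentation and also depend on which instruction is executing when the request arrives.

```c
#include <stdint.h>

/* Hypothetical per-stage costs, in CPU clock cycles. */
typedef struct {
    uint32_t request_and_ack;  /* process the request and acknowledge it */
    uint32_t vector_fetch;     /* obtain the vector (0 if non-vectored) */
    uint32_t context_switch;   /* save context and branch to the ISR */
} latency_budget;

/* Total interrupt latency in cycles. */
uint32_t latency_cycles(latency_budget b) {
    return b.request_and_ack + b.vector_fetch + b.context_switch;
}

/* Convert a cycle count to nanoseconds for a core clocked at clock_hz. */
uint64_t cycles_to_ns(uint32_t cycles, uint32_t clock_hz) {
    return (uint64_t)cycles * UINT64_C(1000000000) / clock_hz;
}
```

For example, with hypothetical costs of 4 + 2 + 6 cycles on a 16 MHz core, `latency_cycles` gives 12 cycles and `cycles_to_ns` gives 750 ns.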

When a lower-priority interrupt is triggered during the processing of a higher-priority interrupt, or a higher-priority interrupt is triggered during the processing of a lower-priority interrupt, the interrupt latency for the original lower-priority interrupt increases to include the time in which the higher-priority interrupt is handled.
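
As a simplified model (the symbols are introduced here for illustration): let $L_0$ be the latency the lower-priority interrupt would see on its own, $H$ the set of higher-priority interrupts handled before the lower-priority request is serviced, and $C_h$ the handling time of each one (only the remaining time for one already in progress). Then

$$L_{\text{low}} = L_0 + \sum_{h \in H} C_h$$

With hypothetical figures of $L_0 = 2\,\mu\text{s}$ and a single higher-priority ISR with $15\,\mu\text{s}$ left to run, $L_{\text{low}} = 2 + 15 = 17\,\mu\text{s}$.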

Within the ISR itself, additional overhead is caused by the context information being stored at the start of the ISR and retrieved at the end of the ISR. The time to context switch back to the original instruction stream that the CPU was executing before the interrupt was triggered also adds to the overall interrupt execution time.

While the hardware aspects of interrupt handling (the context switching, processing interrupt requests, etc.) are beyond the software’s control, the overhead related to when the context information is saved, as well as how the ISR is written, in terms of both the programming language used and its size, is under the software’s control.

Smaller ISRs, ISRs written in a lower-level language such as assembly (as opposed to larger ISRs, or ISRs written in higher-level languages such as Java), and saving/retrieving less context information at the start and end of an ISR can all decrease the interrupt-handling execution time and increase performance.